The Good, The Bad, And The Stupid In Meta’s New Content Moderation Policies

from the misinformation-about-disinformation dept

When the NY Times declared in September that “Mark Zuckerberg is Done With Politics,” it was obvious this framing was utter nonsense. It was quite clear that Zuckerberg was in the process of sucking up to Republicans after Republican leaders spent the past decade using him as a punching bag on which they could blame all sorts of things (mostly unfairly).

Now, with Trump heading back to the White House and Republicans controlling Congress, Zuck’s desperate attempts to appease the GOP have reached new heights of absurdity. Trump’s threat to have Zuckerberg jailed over the made-up myth that he helped get Biden elected only seemed to confirm that the GOP’s non-stop scapegoating had gotten to him.

Since the election, Zuckerberg has done everything he can possibly think of to kiss the Trump ring. He even flew all the way from his compound in Hawaii to have dinner at Mar-a-Lago with Trump, before turning around and flying right back to Hawaii. In the last few days, he also had GOP-whisperer Joel Kaplan replace Nick Clegg as the company’s head of global policy. On Monday it was announced that Zuckerberg had also appointed Dana White to Meta’s board. White is the CEO of UFC, but also (perhaps more importantly) a close friend of Trump’s.

All this seems pretty damn political.

Then, on Tuesday morning, Zuckerberg posted a video about the changing content moderation practices across Meta’s various properties.

Some of the negative reactions to the video are a bit crazy, as I doubt the changes are going to have that big of an impact. Some of the changes may even be sensible. But let’s break them down into three categories: the good, the bad, and the stupid.

The Good

Zuckerberg is exactly right that Meta has been really bad at content moderation, despite having the largest content moderation team out there. In just the last few months, we’ve talked about multiple stories showcasing really, really terrible content moderation systems at work on various Meta properties. There was the story of Threads banning anyone who mentioned Hitler, even to criticize him. Or banning anyone for using the word “cracker” as a potential slur.

For me, it was all a great demonstration of Masnick’s Impossibility Theorem of content moderation at scale, and of how mistakes are inevitable. I know that people within Meta are aware of my impossibility theorem, and have talked about it a fair bit. So some of this appears to be Meta recognizing that it’s a good time to recalibrate how it handles such things:

In recent years we’ve developed increasingly complex systems to manage content across our platforms, partly in response to societal and political pressure to moderate content. This approach has gone too far. As well-intentioned as many of these efforts have been, they have expanded over time to the point where we are making too many mistakes, frustrating our users and too often getting in the way of the free expression we set out to enable. Too much harmless content gets censored, too many people find themselves wrongly locked up in “Facebook jail,” and we are often too slow to respond when they do.

Leaving aside (for now) the use of the word “censored,” much of this isn’t wrong. For years it felt that Meta was easily pushed around on these issues and did a shit job of explaining why it did things, instead responding reactively to the controversy of the day.

And, in doing so, it’s no surprise that as its setup grew more and more complex, its systems kept banning people for very stupid reasons.

It actually is a good idea to try to fix that, and if part of the plan is to be more cautious about issuing bans, it seems reasonable enough. As Zuckerberg announced in the video:

We used to have filters that scanned for any policy violation. Now, we’re going to focus those filters on tackling illegal and high-severity violations, and for lower-severity violations, we’re going to rely on someone reporting an issue before we take action. The problem is that the filters make mistakes, and they take down a lot of content that they shouldn’t. So, by dialing them back, we’re going to dramatically reduce the amount of censorship on our platforms. We’re also going to tune our content filters to require much higher confidence before taking down content. The reality is that this is a trade-off. It means we’re going to catch less bad stuff, but we’ll also reduce the number of innocent people’s posts and accounts that we accidentally take down.

Zuckerberg’s announcement is a tacit admission that Meta’s much-hyped AI is simply not up to the task of nuanced content moderation at scale. But somehow that angle is getting lost amidst the political posturing.

Some of the other policy changes also don’t seem all that bad. We’ve been mocking Meta for its “we’re downplaying political content” stance from the last few years as being just inherently stupid, so it’s nice in some ways to see them backing off of that (though we’ll discuss the timing and framing of this decision later in this post):

We’re continually testing how we deliver personalized experiences and have recently conducted testing around civic content. As a result, we’re going to start treating civic content from people and Pages you follow on Facebook more like any other content in your feed, and we will start ranking and showing you that content based on explicit signals (for example, liking a piece of content) and implicit signals (like viewing posts) that help us predict what’s meaningful to people. We are also going to recommend more political content based on these personalized signals and are expanding the options people have to control how much of this content they see.

Finally, most of the attention people have given to the announcement has focused on the plan to end the fact-checking program, with a lot of people freaking out about it. I even had someone tell me on Bluesky that Meta ending its fact-checking program was an “existential threat” to truth. And that’s nonsense. The reality is that fact-checking has always been a weak and ineffective band-aid to larger issues. We called this out in the wake of the 2016 election.

This isn’t to say that fact-checking is useless. It’s helpful in a limited set of circumstances, but too many people (often in the media) put way too much weight on it. Reality is often messy, and the very setup of “fact checking” seems to presume there are “yes/no” answers to questions that require a lot more nuance and detail. Just as an example of this, during the run-up to the election, multiple fact checkers dinged Democrats for calling Project 2025 “Trump’s plan”, because Trump denied it and said he had nothing to do with it.

But, of course, since the election, Trump has hired a bunch of the Project 2025 team, and they seem poised to enact much of the plan. Many things are complex. Many misleading statements start with a grain of truth and then build a tower of bullshit around it. Reality is rarely a matter of “this is true” or “this is false”; it’s about understanding degrees, such as “this is accurate, but doesn’t cover all of the issues” or “this doesn’t deal with the overall reality.”

So, Zuck’s plan to kill the fact-checking effort isn’t really all that bad. I think too many people were too focused on it in the first place, despite how little impact it seemed to actually have. The people who wanted to believe false things weren’t being convinced by a fact check (and, indeed, started to falsely claim that fact checkers themselves were “biased.”)

Indeed, I’ve heard from folks at Meta that Zuck has wanted to kill the fact-checking program for a while. This just seemed like the opportune time to rip off the band-aid in a way that also gains a little political capital with the incoming GOP team.

On top of that, adding in a feature like Community Notes (née Birdwatch from Twitter) is also not a bad idea. It’s a useful feature for what it does, but it was never meant to be (nor could it ever be) a full replacement for other kinds of trust & safety efforts.

The Bad

So, if a lot of the functional policy changes here are actually more reasonable, what’s so bad about this? Well, first off, the framing of it all. Zuckerberg is trying to get away with the Elon Musk playbook of pretending this is all about free speech. Contrary to Zuckerberg’s claims, Facebook has never really been about free speech, and nothing announced on Tuesday does much to aid free speech.

I guess some people forget this, but in the earlier days, Facebook was way more aggressive than sites like Twitter in terms of what it would not allow. It very famously had a no nudity policy, which created a huge protest when breastfeeding images were removed. The idea that Facebook was ever designed to be a “free speech” platform is nonsense.

Indeed, if anything, ending the program is an act of self-censorship by Meta. After all, the entire fact-checking program was an expression of Meta’s own position on things. It was “more speech.” All fact-checking does is add context and additional information; it doesn’t remove content. By no stretch of the imagination is fact-checking “censorship.”

Of course, bad faith actors, particularly on the right, have long tried to paint fact-checking as “censorship.” But this talking point, which we’ve debunked before, is utter nonsense. Fact-checking is the epitome of “more speech”— exactly what the marketplace of ideas demands. By caving to those who want to silence fact-checkers, Meta is revealing how hollow its free speech rhetoric really is.

Also bad is Zuckerberg’s misleading use of the word “censorship” to describe content moderation policies. We’ve gone over this many, many times, but using censorship as a description for private property owners enforcing their own rules completely devalues the actual issue with censorship, which is the government suppressing speech. Every private property owner has rules for how you can and cannot interact in their space. We don’t call it “censorship” when you get tossed out of a bar for breaking their rules, nor should it be called censorship when a private company chooses to block or ban your content for violating its rules (even if you argue the rules are bad or were improperly enforced).

The Stupid

The timing of all of this is obviously political. It is very clearly Zuckerberg caving to more threats from Republicans, something he’s been doing a lot of in the last few months, while insisting he was done caving to political pressure.

I mean, even Donald Trump is saying that Zuckerberg is doing this because of the threats that Trump and friends have leveled in his direction:

I raise this mainly to point out the ongoing hypocrisy of all of this. For years we’ve been told that the Biden campaign (pre-inauguration in 2020 and 2021) engaged in unconstitutional coercion to force social media platforms to remove content. And here we have the exact same thing, except that it’s much more egregious and Trump is even taking credit for it… and you won’t hear a damn peep from anyone who has spent the last four years screaming about the “censorship industrial complex” pushing social media to make changes to moderation practices in their favor.

Turns out none of those people really meant it. I know, not a surprise to regular readers here, but it should be called out.

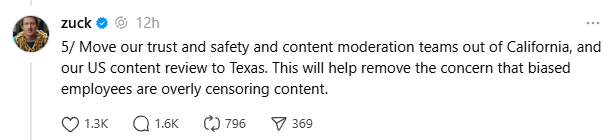

Also incredibly stupid is this, which we’ll quote straight from Zuck’s Threads thread about all this:

That’s Zuck saying:

Move our trust and safety and content moderation teams out of California, and our US content review to Texas. This will help remove the concern that biased employees are overly censoring content.

There’s a pretty big assumption in there which is both false and stupid: that people who live in California are inherently biased, while people who live in Texas are not. People who live in both places may, in fact, be biased, though often not in the ways people believe. As a few people have pointed out, more people in Texas voted for Kamala Harris (4.84 million) than did so in New York (4.62 million). Similarly, almost as many people voted for Donald Trump in California (6.08 million) as did so in Texas (6.39 million).

There are people with all different political views all over the country. The idea that everyone in one area believes one thing politically, or that you’ll get “less bias” in Texas than in California, is beyond stupid. All it really does is reinforce misguided stereotypes.

The whole statement is clearly for political show.

It also sucks for Meta’s trust & safety employees who want access to certain forms of healthcare, or who want net neutrality or other policies that are super popular among voters across the political spectrum, but which Texas has decided are inherently not allowed.

Finally, there’s this stupid line in the announcement from Joel Kaplan:

We’re getting rid of a number of restrictions on topics like immigration, gender identity and gender that are the subject of frequent political discourse and debate. It’s not right that things can be said on TV or the floor of Congress, but not on our platforms.

I’m sure that sounded good to whoever wrote it, but it makes no sense at all. First off, thanks to the Speech and Debate Clause, literally anything is legal to say on the floor of Congress. It’s like the one spot in the world where there are no rules at all over what can be said. Why include that? Things could literally be said on the floor of Congress that would violate the law if posted on Meta’s platforms.

Also, TV stations literally have restrictions known as “standards and practices” that are way, way, way more restrictive than any set of social media content moderation rules. Neither of these is a relevant benchmark for social media. What jackass thought that using examples of (1) the least restricted place for speech and (2) a way more restrictive place for speech made this a reasonable argument to make here?

In the end, the reality here is that nothing announced this week will really change all that much for most users. Most users don’t run into content moderation all that often. Fact-checking happens but isn’t all that prominent. But all of this is a big signal that Zuckerberg, for all his talk of being “done with politics” and no longer giving in to political pressure on moderation, is very engaged in politics and a complete spineless pushover for modern Trumpist politicians.

Filed Under: automation, california, content moderation, donald trump, fact checking, joel kaplan, mark zuckberberg, texas, trust & safety

Companies: facebook, meta

Comments on “The Good, The Bad, And The Stupid In Meta’s New Content Moderation Policies”

Mike, it’s not stupid. It’s intentional. It’s about ideology (in Zuckerberg’s case) and it’s about money. Brands like McDonald’s scaling back or ditching diversity efforts entirely to appease the orange baboon and his clowns add to the shitstorm.

It’s 1938 again.

Re:

One doesn’t preclude the other.

Re:

I do not see how those are mutually exclusive.

Re: Re:

Fair point. The way I see it, it’s not stupid if you consider what the desired end result is.

Re: Re: Re:

This is a dichotomy in mindsets i’ve seen and experienced since i was very young.

i guess they are both valid. There can also be more nuance.

But of course, sometimes, people are idiots and geniuses at the same time because they merely happen to do the things which cause change, without really any planning, foresight, or intelligence. (Just another option or twist on previous options.) Take Steve Jobs. He was persistent, which is about all. [Accepting loud noises about my example below.]

Re: Re: Re:2

So it’s 50-50?

Re: Re:

I fail to see how it could be intentionally stupid, but intentional and stupid may be the best explanation.

It may be proof that Mark is finally human and, like most of us, has no idea how things will go in the next few years.

Re: Re: Re:

It may be proof that Mark is finally human and, like most of us, has no idea how things will go in the next few years.

👍

Something not mentioned in this article, but I wish it had been: Per Wired, Meta will now allow “allegations of mental illness or abnormality when based on gender or sexual orientation, given political and religious discourse about transgenderism and homosexuality and common non-serious usage of words like ‘weird’ ”. I fail to see how that—and some of the other changes outlined in that article—could be anything but giving in to right-wing assholes out of fear.

Re:

Yes, and?

People are free to call your kinks a mental illness. That may or may not be “hate speech” but it doesn’t matter, free speech includes “Hate speech”.

People are free to say things you don’t like. They’re even free to say things you really, really, really dislike. No matter how much you cry about it.

They don’t have to respect your pronouns, nor bake you a cake.

Re: Re:

You’ve just completely given up on hiding your bigotry, huh?

Re: Re: Re:

I have completely stopped caring whatever tf you think “bigotry” is. The entiiiiirrrrrre right side of the country has (which has grown, btw). It was clear to me that was just a political weapon years ago but you had to wear out “fascist” etc and now EVERYONE sees it.

Congrats, you played yourself.

Re: Re: Re:2

Yes, we’re all well aware that right-wing opposition to the principles of DEI is, at its root, a desire to reinstate segregation.

Re: Re: Re:3

DEI is just racial (and other) discrimination. You are the racists in this case.

Re: Re: Re:4

You forgot to add that war is peace and freedom is slavery.

What the actual fuck. How do weirdos like Mr. B here get their entire perception of the world so upside-down like this?

Re: Re: Re:5

Right-wing propaganda, poor education, a strict religious upbringing, and a general lack of empathy.

Re: Re: Re:6

Sure. Absolutely, all those things helped put them in the place where they are.

But really I think the thing that most baffles me is the mindset that keeps them there.

I didn’t have the greatest education or upbringing, yet I’ve always been curious enough to keep looking stuff up and trying to better understand.

I fail at it a lot, but I still want to keep getting better at this whole person-ing thing.

I deal with magas all the time irl. The pride some of these people have in being so insular, meanspirited, and ignorant just… it’s so alien to me, to how I think and how I see the world.

I can’t wrap my head around why anyone would want to stay so… small.

I really don’t think Fox and poor schools did that to them. Religion… it doesn’t help, but I don’t think it’s the roots of it, either.

I can tease and joke but ultimately I don’t want to hate anyone and I don’t want to think anyone’s hopeless.

But goddamn if this nakedly bigoted, willfully ignorant maga shit doesn’t constantly test that.

Re: Re: Re:7

Religion can actually be the lynchpin, at least for younger people. I was a conservative Christian raised in a conservative Christian military family up until I went to college. Once I gave up on being Christian, everything else fell away within about five years of deprogramming. It allows believers to be self-righteous and to proudly eschew facts in favor of blind misinformed faith. They train themselves to argue around facts. Anything that doesn’t fit their received world view must be wrong instead of their world view being wrong. Everything else follows.

Re: Re: Re:4

Lemme guess you totes have a black friend too!

Re: Re: Re:4

Dawww did your little racist tirade tucker you all out? See ya next time where we can discuss why no one likes you and what that has to do with your sexuality.

Re: Re: Re:2

Thank you for confirming your bigotry.

Re: Re: Re:3

I feel like you’re not getting it. No one cares

Re: Re: Re:4

Your own rant earlier in this thread proves yourself wrong.

Dear lord, you can’t even keep track of your own bullshit anymore.

Re: Re: Re:4

Tell us again how much you don’t care bro!

Re: Re: Re:2

A few small percentage point shifts in some states and you would’ve been crying about Harris being president instead.

People like you think you’re so incredibly smart with your blatant cruelty and bullying, but the only joke here is you.

I know this comment isn’t gonna reach you and change your mind, but neither is any of the garbage you’re putting into text.

Re: Re: Re:2 Stop playing (with) yourself

“Congrats, you played yourself.”

He said, just after having in fact played hisself

Re: Re:

Did you ever back that cake on Kickstarter bro?

Re: Re:

If my kinks include fucking minors, animals, or dead people, then yes. Otherwise, no.

Re: Re: Re:

Nice to see how many trolls think that a desire to fuck children is healthy. Clearly the same individuals that want to inspect children’s genitalia to prevent trans people engaging in public participation.

Re:

That’s coming in a separate article.

Re: Re:

I really don’t care dude, I’m just here to laugh at you and gloat.

Re: Re: Re:

Wasn’t talking to you, but your ego is so inflated you assume I must be.

Re: Re: Re:

Hmmm. If you ARE here to laugh at Mike, wouldn’t his coming out with another article be exactly something you care about? I mean, it’s ANOTHER place for you to show off at.

Could I ask that you keep single sentence and/or 14 word strings coherent and non-self contradictory?

Otherwise you run the risk of others laughing at you.

Re: Re: Re:2

Maybe he should try limiting his character count. I’m guessing 88 letters might be the number he’s looking for.

Re: Re: Re:3

The fact that you weirdos go around counting how many words people use makes you look insane.

Re: Re: Re:4

Maybe look at the two numbers used in the comments before your reply there, then figure out The Implication™ we’re tossing in your direction. 🙃

Re: Re: Re:5

Now, that’s not fair, Stephen! You know Matty can’t count!

Re: Re: Re:6

Not entirely true! I’m pretty sure he can only count to four.

Re: Re: Re:6

Goatfucker Bennet’s the type that have trouble counting the number of ‘r’s in strawberry.

Re: Re: Re:5

I understand “the implication”, it’s dumb af. Reading comprehension, do you have it?!

I wouldn’t even know what ANY of these numbers are if I didn’t have sh!itlibs obsessed with imaginary nazis to tell me about them. (I wouldn’t know that Soros was Jewish, either, except you yell “antisemite!” at anyone criticizing him)

Your accusations are self-referential, circular and inbred. They have absolutely nothing to do with me, just your ridiculous obsessions.

Re: Re: Re:6

Son did you shit in your hand and draw this post on the nearest wall as a first draft?

Re: Re: Re:6

I doubt that.

I doubt that, too. You seem like the kind of person who is well aware of the veiled meaning of the number 1488, especially in relation to white supremacists and Nazis.

It is nigh impossible to not know Soros is Jewish if you pay even the least bit of attention to the news beyond whatever headline you read on the front page of Fox News in the morning.

Now now, that’s no way to talk about yourself.

…says the guy who keeps coming back to a website he hates so he can interact with people he hates and let nothing but negative energy flow through him so he can attempt to spread more hate and make everyone as angry and antisocial as he is, was, and always will be. Does it physically and mentally exhaust you to be so hostile and hateful all the time, to never allow yourself a moment of joy that isn’t rooted in someone else’s pain, to feel so bad about your life that you take out your frustrations on a bunch of people you’ve never even met?

Re: Re: Re:4

TIL: Bratty Matty thinks most words aren’t made up of more than one character.

Re: Re: Re:

TELL EM HOW MUCH YOU DON’T CARE BRO!

Re: Re:

Good to know. 👍

Rapid-onset political realignment

I came here to drink your tears, MM. All your ideas are bad and you are wrong (or lying) about everything.

This is just Glasnost, and as part of the far-left old party, you’re really upset about it.

FB, Twitter, YT and others became part of a censorship engine directed by the Biden administration. True things were labeled “misinformation”, “fact checks” were just some liberal journos sh!tlib opinion, and the viewpoint discrimination was poignant and real. Being conservative (socially or economically) was labeled hate speech.

And you, a far-left partisan (who maddeningly pretends to be neither) lied and pretended it wasn’t happening. You lie about what the very nature of free speech is. And now the “guy who said Facebook was not suppressing free speech announces Facebook will stop suppressing free speech”

Everything you thought was good and I hated about the modern media environment is going away and I am so here for it. Heck, in a year or two CNN and MSNBC probably won’t exist.

Zuckerberg saw what Musk was doing, the guy you hate and malign constantly and said that looks good and I should copy him.

I am so happy you’re unhappy. And your rabid followers will downvote this post and it will be “hidden” and you’re still gonna read it and that makes me happy too.

A few notes:

He was using the word correctly, you often lie about what that word means.

No, suppressing things you disagree with (and they were suppressed, don’t lie about that) is not “free speech” nor “more speech”

Strawman. Most complaints were about the FBI, CDC and others, real orgs with real enforcement power.

Literally anything besides threats of violence should be legal to say anywhere. That’s what free speech is.

Someone doesn’t know how percentages work (well, really you’re just trying to mislead). But sidenote: the reddening of far left blue states was actually really interesting to see.

Gawd, it’s gonna be a fun 4 years.

Re:

Oh, I forgot one:

That’s cuz you’ve never tried to share a right wing meme on FB. It’s gonna have a HUGE impact. Routinely a meme that liberals disagreed with would be labeled “wrong” and have its reach suppressed. Often, cuz the liberal in question never understood the meme, the “fact check” wouldn’t even make any sense.

Your ignorance is both hilarious and terrifying.

Re: Re:

Tell us again how much you don’t care bro!

Re: Re:

Forgot to take your meds?

Re:

Matthew Bennett apparently now saying CSAM is fine and shouldn’t be illegal. Quite a take!

Also, apparently, making it clear he totally disagrees with Elon Musk’s stance on doxxing, as well as his suppression of the word “cisgender.”

Also, kind of a weird stance given that just this week you mocked us for supporting TikTok users’ free speech rights.

Look, I get it: you can’t think for yourself. You have no original ideas. You have no principles at all. You’re just a “whatever Trump/Elon says must be good” kinda automaton, who cannot actually comprehend the inherent contradictions in their stances.

Re: Re:

Considering how he’s siding with the political party that wants to protect the institution of child marriage and has no problem with forcing a tender-age girl to birth the child of her rapist? I don’t think we should be that surprised.

Re: Re: Re:

Your victimhood fantasies do not actually say anything about me, believe it or not.

Re: Re: Re:2

You can look up stories of Republicans opposing bills that, were they to become law, would prevent child marriages. In fact, Republicans in Missouri did that last year. As for the “forcing raped girls to give birth” thing…well, do you really need me to hand-walk you through the consequences of a total abortion ban? That besides, you can also find plenty of Republicans who oppose allowing abortions in cases of rape/incest—like, say, Missouri Republicans, one of whom said the following about rape not being a good enough reason to justify an abortion: “God is perfect[.] … God does not make mistakes. And for some reason he allows that to happen. Bad things happen.”

I can read the news. I can use Google. If you can’t, that’s your problem. Solve it yourself.

Re: Re: Re:2

Oh you’ve told us plenty about yourself. Mostly inadvertently.

I can just start listing them if you want bro.

Let’s start simple.

Even considering the limited sexual experience you’ve had. You’ve never been able to make your partner cum.

Re: Re:

Like credible threats of violence, it’s one of the few cases where speech is actually against the law, dumbass

Doxxing is just a tool to enable a threat of violence, it’s targeting data. As used so ably against SC Justices, which you thought was fine for some reason. So yeah, Musk and I are on the same page.

What a weird way to say “we support CCP propaganda”. It’s not a free speech issue at all, you’re an idiot, which is why I was mocking you. TikTok is in fact the Chinese government routinely engaging in censorship on american citizens.

Buddy, I don’t think you do get it: I’ve won. This is what I wanted. The censorship YOU wanted is evil and is going away. It was probably going to go away anyway even without Trump winning, people were so tired of it, but now it is happening way faster, and the overton window is going to move so fast it’s gonna give you whiplash. Your ideas are being rejected in real time.

You can call me “stupid”, or that I’m being “misled” but whatever petty insult you want to level but this is really just a bunch of cope and seethe. Pretty literally.

Re: Re: Re:

And the censorship YOU want is justified and coming soon to a platform near you. Yes, we get it, you like it when things align with your ideology.

Re: Re: Re:2

Your reading comp is so, so poor.

Re: Re: Re:3

There’s that mirror commentary again.

Re: Re: Re:4

It’s a BDAC classic.

Re: Re: Re:

Dude, I was just responding to what YOU yourself said. You claimed that “Literally anything besides threats of violence should be legal to say anywhere. That’s what free speech is.”

Now, you’re moving the goalposts and saying also CSAM. Which is fine, but you just admitted that you were full of shit when you said “literally anything besides threats of violence.”

Ah, so again, your “literally anything besides threats of violence” definition is expanding to include tools that might enable threats of violence.

Under that definition, wouldn’t “hate speech” also qualify? After all, there’s WAY more evidence that hate speech can lead to violence than, say, mentioning someone’s name.

I mean, I’m just proving a point here, Matthew. These issues are more complicated than you make it out to be, and every time you make a concrete statement, it’s easy to prove you don’t understand literally anything about the issue at all, beyond what Trump/Musk are saying, which you will twist to justify no matter how hard you have to bend over backwards.

Wait, wait. So now “CCP propaganda” is also not included in your definition of free speech, which again seems like YET ANOTHER exception to your “literally anything but threats of violence” claim.

It’s almost like you haven’t thought any of this out, Matthew.

Maybe you should leave commentary on “free speech” to people who actually understand it.

You didn’t win shit. Donald Trump won, and you support him. You didn’t win shit. You’re just a braindead fan boy who has never had an original thought in his life.

You didn’t even read the fucking post, did you? I said it was good they were fixing a bunch of those policies.

One of these days, Matthew, if you ever grow up and have a single original thought, you might realize that I am the biggest free speech supporter you’ve ever come across in your life. I don’t support “censorship.” And I’ve said for years that Meta’s content moderation efforts suck, and I said above that I think that part of the announcement is good.

You’re just so wedded to complete myths. It’s embarrassing for you.


Re: Re: Re:2

Dude, no you didn’t, you made a strawman. You then spent several disingenuous paragraphs trying to justify the strawman.

I’m not reading the rest, this is pathetic. Again, I mostly just come here to mock you. But your ungenuine arguments combined with outright lying make engaging with any replies absolutely pointless. (I’d already stopped looking for replies, this article was just particularly delicious)

You’ve lost. Everything you wanted, including your burning desire to be part of the censorship complex is going away. People have seen behind the curtain and smelled the bullcrap. And I really couldn’t be happier for it cuz it was all evil. Censorship is mostly going away on FB, Bhattacharya is going to run NIH (ironic), Patel is going to rip the FBI a new one, and people with mental illness won’t be allowed in the military. Oh, TikTok’s gone, too. (that one is funny cuz you think I agree with Trump on everything)

Go shout “bigot” and “but, I’m right” into the void. No one who matters is ever going to listen to you anymore.

Enjoy the next 4 (more likely 12) years.

Re: Re: Re:3

No, he didn’t. This was your exact wording: “Literally anything besides threats of violence should be legal to say anywhere.” Per that phrasing, it’s reasonable to believe that you support the idea of CSAM—which fits in the group of “literally anything besides threats of violence”—being “legal to [post] anywhere”. You made that post with that phrasing; don’t blame others for your mistake.

And that says a lot about you. None of it is good, but it does say a lot nonetheless.

Projection like this should come with a box of Reese’s Pieces and a collector’s cup.

Three things.

Re: Re: Re:3

“You’ve lost. Everything you wanted…”

Do you think repeating it over and over and over again will make it true for anyone but you?

Re: Re: Re:3

It’s not a strawman to repeat your LITERAL WORDS. You claimed — and I’ll quote it again — “Literally anything besides threats of violence should be legal to say anywhere. That’s what free speech is.”

I then tried to explore the implications of such a statement.

Then you responded by moving the goalposts, and (effectively) admitting that you didn’t actually mean what you said.

In short, I proved you were full of shit USING YOUR OWN WORDS. And now you’re throwing a temper tantrum.

How pathetic.

Re: Re: Re:4

I’d have used the word “typical”. It’s just as accurate, but contains greater meaning.

Re: Re: Re:

See, hun, it’s throwing shit fits like this that earned you the name Crybitch.

Re: Re: Re:

Aside from how obvious it is otherwise, it’s comments like this that show how much you get your unoriginal thoughts from propaganda echo chambers. Make a throwaway email account, sign up for TikTok, start watching videos, and then tell me how much propaganda and Chinese state censorship you see. It’s videos of DnD nerds telling you what class to play based on your star sign. It’s people on the spectrum showing off their niche hobbies. It’s people speculating about 6th dimensions and alien convergences. It’s full of the speech of Americans.

But you’re a hypocrite (feature, not a bug!) because you don’t have a problem with Musk-brand censorship on extwitter.

Do you think the TikTok marketplace where you can buy stuff is a secret communist plot to convert Americans through blatant capitalist activities?

For shits and giggles, I just searched for “tiananmen square” on TikTok and found uncensored videos about the massacre. I searched for “free tibet” and “independent taiwan” and found plenty of videos that were critical of the Chinese government.

What’s the censorship you’re referring to?

And of course you’re happy and got everything you wanted. You were told what you wanted by your echo chamber. And when the cognitive dissonance inevitably sets in when something isn’t perfect, you’ll just fall back to blaming the evil libs for something that they can’t possibly have caused. You won’t admit it here when it happens, so I’ll just say it now: you got everything you thought you wanted and everything that goes wrong will be a consequence of that.

Re:

There’s been a lot written about parasocial relationships and how freaking weird people who get majorly invested in them are, so this is just a friendly reminder that having inordinate animus towards a journalist who you have never met is also parasocial and is also weird.

Re:

Keep on bragging how much you don’t care. Yell it from the rooftops at the top of your lungs if you have to!

So, why Texas instead of, say, Florida? Would it make it easier for potential new legal actions to go through the Fifth Circuit?

Re:

I imagine there could also be financial reasons.

Zuckerberg is competing with Musk to see who can suck Trump’s rapist cock harder.


Re:

Careful now, if he can find out who you are you might wind up owing him $15M.

Re: Re:

Ah, so we have found yet another exception to the rule you stated above that “literally only threats of violence” shouldn’t be considered free speech.

You didn’t mention defamation did you.

Also, you don’t know how defamation works if you think that’s defamation. And, no, ABC’s capitulation doesn’t prove that the statement here is defamation because (1) it was a capitulation in a case ABC likely would have won if they wanted to fight it and (2) even if it was, it was the very specific manner of what GS had said, claiming that a jury had convicted him of rape, that was the issue.

But again, your brain is pickled by MAGA memes and nonsense, so the actual details of literally anything about actual free speech elude you.

Re: Re:

First, diaper-shitting senile moron Donald Trump isn’t capable of any form of research or investigation. It’s as far beyond his pitifully feeble intellectual abilities as quantum mechanics is beyond my dog. So it’s not that Trump would find out who I am, it’s that one of his lackeys might do so.

Second, I would absolutely welcome litigation over this, because — as is often noted — discovery is a bitch.


Losing Their Grip

If removing restrictions on speech is political, then the placement of the restrictions in the first place was political. We knew all along that there was a bias.

Re:

And now we know for sure that the bias leans in the direction of conservatives/right-wingers. I mean, we kind of already knew that when Meta and Twitter bent over backwards to avoid banning major conservative figures (e.g., Donald Trump) for TOS violations that would get anyone else banned in a heartbeat. But it’s nice to have the proof laid out for everyone to see.

Re:

Shut up Kdawg. It’s Crybitch’s turn to get the attention he so desperately craves.

Checking 1... 2... 3...

Facts are inconvenient, they could go against your political or religious beliefs.


Re:

I don’t think you are making the point you think you are, which I find delicious.

Re: Re:

Go back a kake brah

Re: Re:

Look at that pigeon prancing through his shit on the chessboard! How majestic!

Re: Re:

The only truthful words you have ever spoken.

Could be good, could be bad

We have no idea how the system would work, but it could be good or bad.

But generally I think it could be good from a Kafkaesque perspective.

Before, people were mad at fact checkers, but now what? Random people on Facebook?

Re:

Two things.

Re: Re:

Let me explain how it could be Kafkaesque:

If there is a penalty system, it would further the idea.

Facebook has been involved in two prior lawsuits where a poster tried to sue because they were fact-checked.

According to one of them, they tried appealing to both,

and of course, the next option was to sue if appealing didn’t work.

However, if it’s community notes, that option couldn’t possibly exist, as the person who noted you could be JustTurnedIntoACockroachAnon420.

The Trial is way better than The Metamorphosis.

Re: Re: Re:

…fucking what

Re: Re: Re:2

Look I just think it would be funny if the new fb community notes could demonetize big conservative posts on facebook, and have no way to resolve it because they got noted by a rando.

B**** better have my money

Since FB makes nearly all of its money from advertising, how will this be affected? In reality, little may change in FB but if advertisers perceive changes that put their message in a bad environment, or they start getting negative blowback from consumers, will they go running to the doors like they did with Elmo?


To where? There’s nowhere else.

They’ve mostly returned to Twitter, btw.

Re:

Source?

Re: That darn Elmo

I’ve owned media companies. There is always somewhere else.

X is still losing ad revenue and Elmo is still losing $$ hand over fist. If he wasn’t, he wouldn’t be whining about ad “boycotts”.

https://www.socialmediatoday.com/news/x-formerly-twitter-shares-q5-marketing-tips/735593/

Re:

Returning to Twitter is the social media equivalent of an abuse victim returning to their ex.

It’s not healthy man.

Re: Re:

Why does everyone deadname X?

Re:

Nah bra. That’s just all the bots you claimed were overrunning Kickstarter.

“It’s like the one spot in the world where there are no rules at all over what can be said.”

Well, actually, I think quite a few legislative bodies have similar policies (at least from memory).

Wow, this is great!

Now I will be allowed to post all sorts of derogatory comments about how Donald is insane and all his maga followers are also insane. Facebook will no longer take down my opinions about how fucked up the world is.

Example #123456 on why you can’t trust the market to function well as a motivator for content moderation.

Eh, it doesn’t really have to. A lot of mainstream “fact checkers” fall into that trap, but it’s entirely self-inflicted. It’s possible to approach fact checking in a nuanced and context dependent way.

Meta has been a Nazi Bar well before this. It’s now a Nazi Bar with giant glowing neon swastikas. The people who’ve been working at Meta all this time, and the ones that see it as fine to continue working there, are collaborators.

Zuckerberg is a convicted rapist Nazi. His employees are all little Nazi stooges. Fuck his company and everyone that works there that isn’t trying to actively find another place to go. Actually, fuck everyone who thought that now was the time to jump ship, and not the multitude of other shit that Facebook was a part of, especially years ago when it became clear that Zuck was fine with genocide in places like Myanmar.

Re:

Funny, I searched “Zuckerberg rape conviction” and didn’t come up with anything where he was even charged. Where are you getting your info?

Pain is Coming

Sucker-berg will have to pay the piper. All of those direct lies to Congress. He has won the opportunity to see what happens when his industry is changed because he was unable to do the right thing and be fair on how they addressed censoring one side of politics.


'I appeased the leopards, surely my face is safe now?'

At this point Zuckerberg could get himself a collar with a tag reading ‘Trump’s Tool’ and it would be less of a give-away of how much convicted felon Trump owns him.

The best part of this cowardly capitulation of course is that it won’t actually save him, they’ll still be slagging on him and dragging him over the coals for being ‘anti-conservative’ because why wouldn’t they? He’s not dealing with people who are at all acting in good faith, and since social media makes a great punching bag and way to rile up their supporters, why would they change that now just because he grovelled and kissed the boot?

Is it not reasonable to presume that people seeking employment as content moderators fall into two sets: people who are in favour of censorship, and people who just want a job / don’t give a shit?

Re:

No one is “seeking employment” as a content moderator. It’s one of the most horrible jobs in the world. People are taking the job because they need the money.

Re: Re:

I did.

That depends on the people whose posts one has to moderate. The forum for autistic people I moderated was less horrible, and fairly uncomplicated.

Mine was a purely voluntary position, meaning I did it because I enjoyed it, not for the money.

Re: Re: Facebook is toxic

to its people and puts them in toxic roles. It doesn’t have to be awful and I know people who do good work and enjoy it in communities where they’re empowered to make decisions and make the place better.

Heck, look at all the people who moderate Facebook groups, or on Reddit, for free.

Re: Re:

Volunteering to moderate a community group is not the same as ‘seeking employment’ as a moderator.

I shouldn’t need to spell that out folks.

Re: Re: Re:

TIL: Volunteers do no work. How do we sign people up to vote in elections?

Re: Re: Re:2

Volunteering to be a content moderator on a small website is not the same as seeking employment as a content moderator on a site like Facebook. You know this, so stop acting like you don’t. Being an idiot on purpose still makes you an idiot.

Re: Re: Re:3

Volunteering to be a moderator on Facebook is seeking unpaid employment as a moderator on Facebook. You know this, so stop acting like you don’t. Being a know-nothing on purpose still makes you a know-nothing.*

FYI, I didn’t respond to Drew, but you carry on pretending ACs are all one person if that makes you feel better.

*Not repeating your toxic language targeting people with profound intellectual disability.

Re: Re: Re:4

Facebook might allow people to moderate their own little groups and all, but the bigger job of “moderating all of Facebook” goes to the people paid to deal with (and receive therapy for) taking down content like beheading videos and CSAM.

My usage of “idiot”—which I do try to use as minimally as possible anyway—isn’t meant to disparage anyone but the person I call an idiot. Acting like I’m saying the R-word whenever I call someone an idiot isn’t going to work here, so you can stop trying to weaponize the language of inclusiveness that you and your right-wing brethren otherwise hate.

Re: Re: Re:5

Says the ableist POS excusing his weaponizing of people with profound intellectual disability by using a former medical term created to describe them. If anyone’s on the far right in this, it’s you. But as they often say here: Every accusation a confession.

This comment has been flagged by the community. Click here to show it.

Most users don’t run into content moderation all that often

Most straight white male users don’t run into content moderation all that often. For African users, women users and LGBTQ users the reality is quite different.

Re:

Do you have some data on that?

Re: Re:

Only bullshit he pulls out of his white (self?)-hating ass

Fundamentally, Facebook's approach to moderation

is to rely on algorithms and machines. Sometimes they put people in that loop but only with rigid scripts that seem to be written by people trained as engineers instead of people trained as sociologists or historians or journalists or in any other field that knows about human behavior.

First, the machines take out content that offended no one or was not actually harmful (ex: “men are scum” in a thread where people are commiserating about a particular incident, or even, my favorite, “what a cute little heifer” about someone’s actual baby bovine.)

Second, people are adept at figuring out the rules and walking around them with euphemisms and nuance that become oddly even more caustic because now it’s an in-joke, a secret shared among the special people, creating more tribalism that increases the toxicity of relationships between people or groups.

Pick good people, empower them to know it when they see it, have them constantly update rules, and give them good tools to manage the system. If no one complained, the content probably isn’t a problem. If people complain fraudulently, take away their ability to complain, and maybe kick them from the platform.

Oh, except that hurts unending growth, kicking off horrible people who lie and cheat and do harm. I can see the problem.

Finally, their inability to correct mistakes, big mistakes, is legendary. Like literally telling dozens of people that no, really, their friend truly has turned to a new life of cryptocurrency from a totally new IP rather than do what should have taken 5 minutes to ascertain and fix.

tl;dr version

Everyone stop using social media now. It’s not going to improve. It’s becoming a hopeless cesspit for good. Leave the Nazis to their Nazi bar.

Re:

At this point, the only winning move is not to play.

This is why I advocate for a return to personal websites and such. Bring back the noble web ring! Revive the prominence of RSS!

This was helpful for the "no fact checking" freak outs

Hi Mike-

Followed you for a long time. Thanks for this article. Ever since “alternative facts” language entered the discourse some eight years ago, any move against facts riles me up. I have to think more about what you say here but these points are clarifying. I do wonder if what’s needed, instead of the drastic shift to community notes, is a balance of fact-checking supported by community notes, or a new process for fact checking that acknowledges context. I don’t know if one and not the other is best.

Minor correction

The Speech or Debate Clause does allow speech to be limited by what rules the House and Senate themselves set for debate, which can include not bringing up certain subjects at all (like how in the 1830s or so they barred any discussions of slavery for a few years), or the rule that members may not refer to each other in speeches by name nor directly criticize the president.

Re:

I’d have got around that rule by instead discussing “indentured servitude”. I wonder how far up their asses those politicians’ heads were so they didn’t catch it?

https://www.jordynzimmerman.com

Which it doesn’t do at all, putting you on completely the wrong side of history. Just as the term “moron” was created to describe people like me in psychiatric and legal classifications, so the word “idiot” was used to describe people who tested with much lower IQs than I do. But I suppose you’ll accuse me of “weaponizing the language of inclusion” just to silence me when it’s you that has right wing brethren, not the anonymous commenter who you shit on for advocating for members of my minority group.